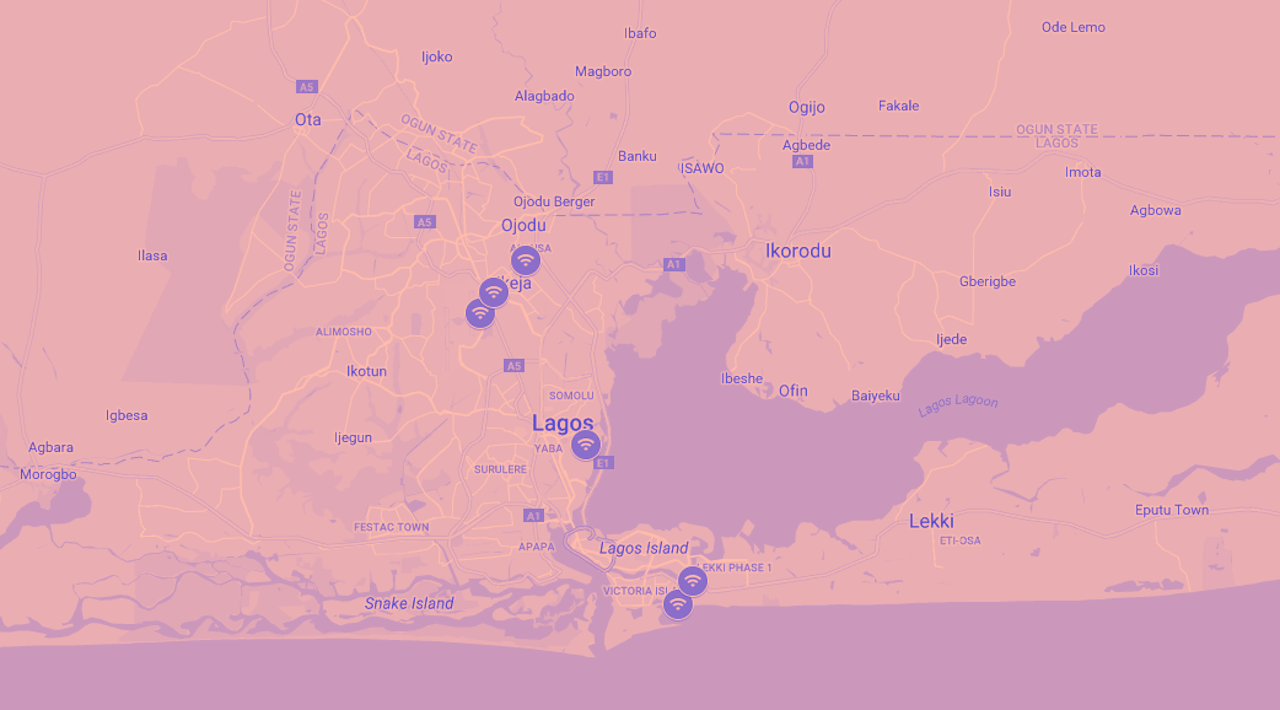

Google is bringing free public Wi-Fi to Nigeria through Google Station, an initiative launched in 2015 that has also brought public Wi-Fi to countries like Mexico, India, and Thailand. The initiative provides a free alternative to Facebook's Express Wi-Fi program, which launched in Nigeria in 2017 and charges data fees. Google profits from the arrangement through ads delivered via the service and by sharing Wi-Fi usage data with Google Station partners, which include Wi-Fi hardware companies and, in Nigeria's case, telecom companies.

Tech companies are rushing to expand their services to countries around the world by providing internet access, but they also have a pattern of botching these rollouts by failing to manage how their platforms amplify fake news, extreme fringe content, hate speech, and political unrest. This is especially true in politically sensitive countries, whose users then pay the price for violence instigated online.

In Nigeria, terrorist groups like Boko Haram have been responsible for thousands of murders of women, religious minorities, and random civilians for over a decade, and they have been known to use digital platforms like Facebook, Telegram, and YouTube to promote ideological propaganda.

Google has met with the Nigerian federal government regarding fake news, but it also has a history of being a relatively poor steward of information online. It has had long-running problems ensuring that its black-box Google News algorithm displays real news in its prioritized "Top Stories" and not, well, conspiracy theories from anonymous, unmoderated forums like 4chan in the wake of a terrorist attack. Displaying fake news during sensitive times carries real risks. Geary Danley, an innocent man, was defamed after being falsely identified on Google News and Facebook as the gunman who killed more than fifty people at a Las Vegas concert.

As reported by The Outline, Google has a track record of blaming these mistakes on the Google News algorithm itself. The algorithm tends to prioritize what's new, and when there are few results about a breaking news event, fake news is more likely to float to the top. But the algorithm is still designed by real people, who are capable of tuning it for accuracy and blacklisting sites with an extensive record of hate speech and misinformation.

Historically, tech companies have underinvested in staff and resources for international rollouts of their services. That becomes a huge problem when potentially violence-inciting fake news and hate speech spread on their platforms and only a couple dozen employees who speak the country's native language are available to address it. In the case of companies like Google and Facebook, it's not clear that there's a roadmap for expanding platform moderation in proportion to platform access. Google did not immediately respond to a request for comment, but The Outline will update this article if we hear back.

The stakes for appropriate news and content moderation couldn't be higher. Myanmar serves as a grim example of what happens when tech companies eagerly expand into countries without proper hate speech moderation, or even employees who speak those countries' native languages. There, thousands of people from the Rohingya ethnic minority have been victims of an ethnic cleansing crisis driven partly by unregulated, unmonitored calls to violence on Facebook, which was made available to citizens at no data cost starting in 2016.

In a recent interview with Kara Swisher of Recode, Mark Zuckerberg said, "I think that we have a responsibility to be doing more [in Myanmar]... We have a whole effort that is a product and business initiative that is focused on these countries that have these [violent] crises that are on an ongoing basis." Facebook (now) acknowledges its responsibility to moderate content in high-risk areas. But what are Facebook's criteria for a high-risk area? Is there a plan to roll out country-specific content moderators and fact checkers in proportion to platform use in each country? There isn't a standardized formula or procedure for either task.

Facebook failed to address the misuse of its platform to accelerate ethnic cleansing in Myanmar, and Google also has an alleged record of dropping the ball on the problem. According to reporting by Devex, U.S. ambassador to Myanmar Derek Mitchell said he reached out several times about the online hate speech plaguing the country to Eric Schmidt, the former Google and Alphabet executive chairman whom Mitchell had met, but Google declined to act.

"Can you deal with hate speech? Can you deal with negative speech and misinformation? Can you figure out a way?" Mitchell asked Schmidt, according to Devex. Granted, Facebook, not Google, is the primary way that users in Myanmar access and navigate the internet, so by far the largest share of the blame for digitally spread hate speech there falls on Facebook. However, Google's unwillingness to cooperate with a government in the midst of a crisis of violence sets a disturbing precedent. What happens when Google is one of the primary ways that people access the internet?

But there's no easy way to make a platform safe. Even real news can incite violence in the wrong hands (Boko Haram has a history of murdering Nigerian journalists who cover its activities), and it's not clear what course of action Google could take to prevent this type of platform misuse.