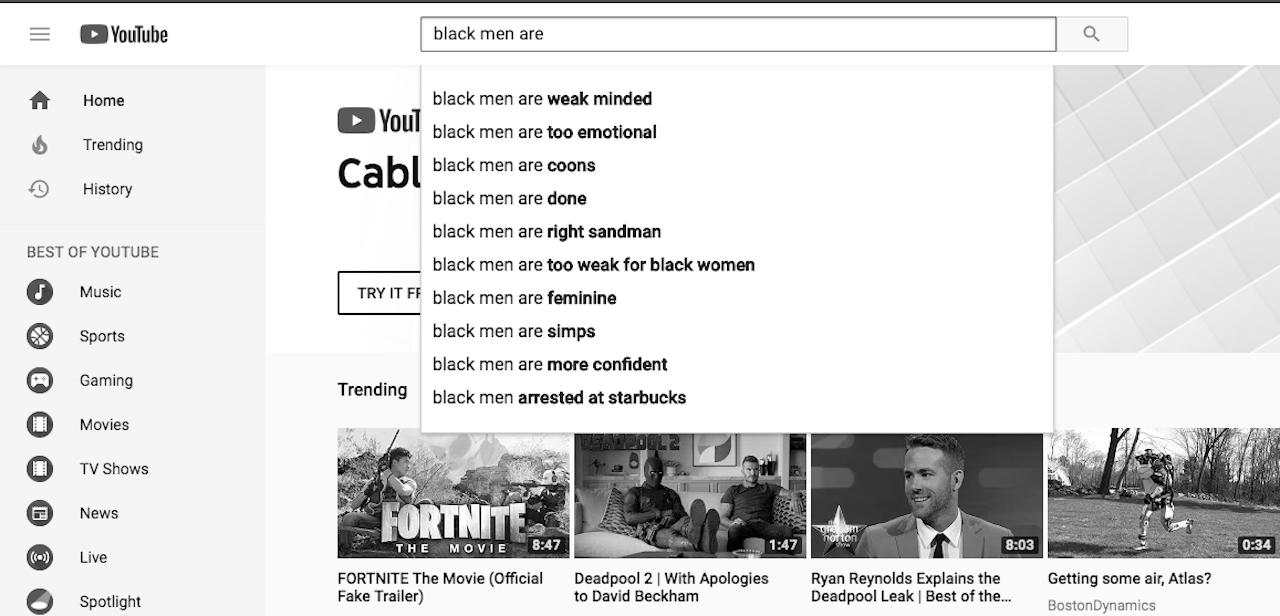

Type the perfectly innocuous phrase “black men are” into YouTube’s search box, and the site will automatically suggest a number of racist results, such as “black men are weak minded,” “black men are too emotional,” and “black men are coons,” among many others. The same is true for “black women are,” which returns suggestions like “black women are the least attractive race,” “black women are not marriage material,” “black women are no good,” and “black women are toxic to black men.”

Professor and researcher Jonathan Albright was the first to discover the suggestions and tweeted about them early Sunday morning. The Outline tested the search on multiple devices and browsers, all in an incognito or private browsing mode, to ensure the search results were not tied to a particular user’s browsing history. The results remained unchanged no matter the browser, account, or device.

Albright mentioned that a search for “chinese are” also returned disturbing results, such as “chinese are terrible people,” “chinese are african,” and “chinese are all the same.” The Outline discovered that a variety of other race-related searches, like “asian men are” and “white women are,” also prompt YouTube to suggest racist and concerning results.

None of these searches were perfectly replicable on other Google platforms, like Google Search, raising the question of why these results would surface solely on YouTube. Though the company is famously tight-lipped when it comes to the inner workings of its products and search algorithms, Google offered some insight into the processes behind Google Search (which is technically different from YouTube Search) in an April 2018 blog post. “The predictions we show are common and trending ones related to what someone begins to type,” explained Google’s Public Liaison for Search, Danny Sullivan. “We have systems in place designed to automatically catch inappropriate predictions and not show them. However, we process billions of searches per day, which in turn means we show many billions of predictions each day. Our systems aren’t perfect, and inappropriate predictions can get through.”

It’s worth noting that this is far from the first time YouTube and Google have come under fire for serving users disturbing autocompletes. Last November, a YouTube search for “how to have” autocompleted with the pedophilic phrase “how to have s*x with your kids,” and Google itself has long struggled with its system’s tendency to suggest racist and sexist results. From suggesting users search “are jews evil?” when typing in “are jews,” to autocompleting the phrase “islamists are” as “islamists are terrorists,” the company has suffered scandal after scandal over its algorithm’s bizarre predictions.

Though the company has made numerous changes to its prediction and user-reporting tools, those tools have never been truly foolproof. In April, Google announced it would be expanding its definition of “inappropriate predictions” in an attempt to stop showing users offensive suggestions, but results such as these seem to indicate it still has a lot of work to do.

Update 05/14/2018 5:00 PM: “On Sunday our teams were alerted to these offensive autocomplete results and we worked to quickly remove them,” a YouTube spokesperson told The Outline over email. “These suggested results should never have appeared on YouTube, and we are working to improve our systems moving forward.” The company is investigating the cause of this issue.