Elon Musk thinks artificial intelligence poses a mortal danger to human civilization. Alibaba founder Jack Ma thinks AI could be responsible for World War III. Bill Gates supports a robot tax. Mark Zuckerberg believes AI will make our lives better.

But as the debate over the possibly apocalyptic consequences of building human-like intelligence rolls on, one thing has been curiously absent: the voices of engineers with actual, hands-on experience in designing AI.

“Artificial intelligence” is a bit of a catch-all term today. Robotics, deep learning, and things like Siri all get lumped together under the label of “AI.” But in the 1960s, researchers had a much simpler goal: to build machines and software that could replicate the cognitive function of the human brain. This is what’s commonly referred to as “general AI” or “strong AI” — an artificial intelligence that, once programmed, can learn, reason, and make decisions autonomously.

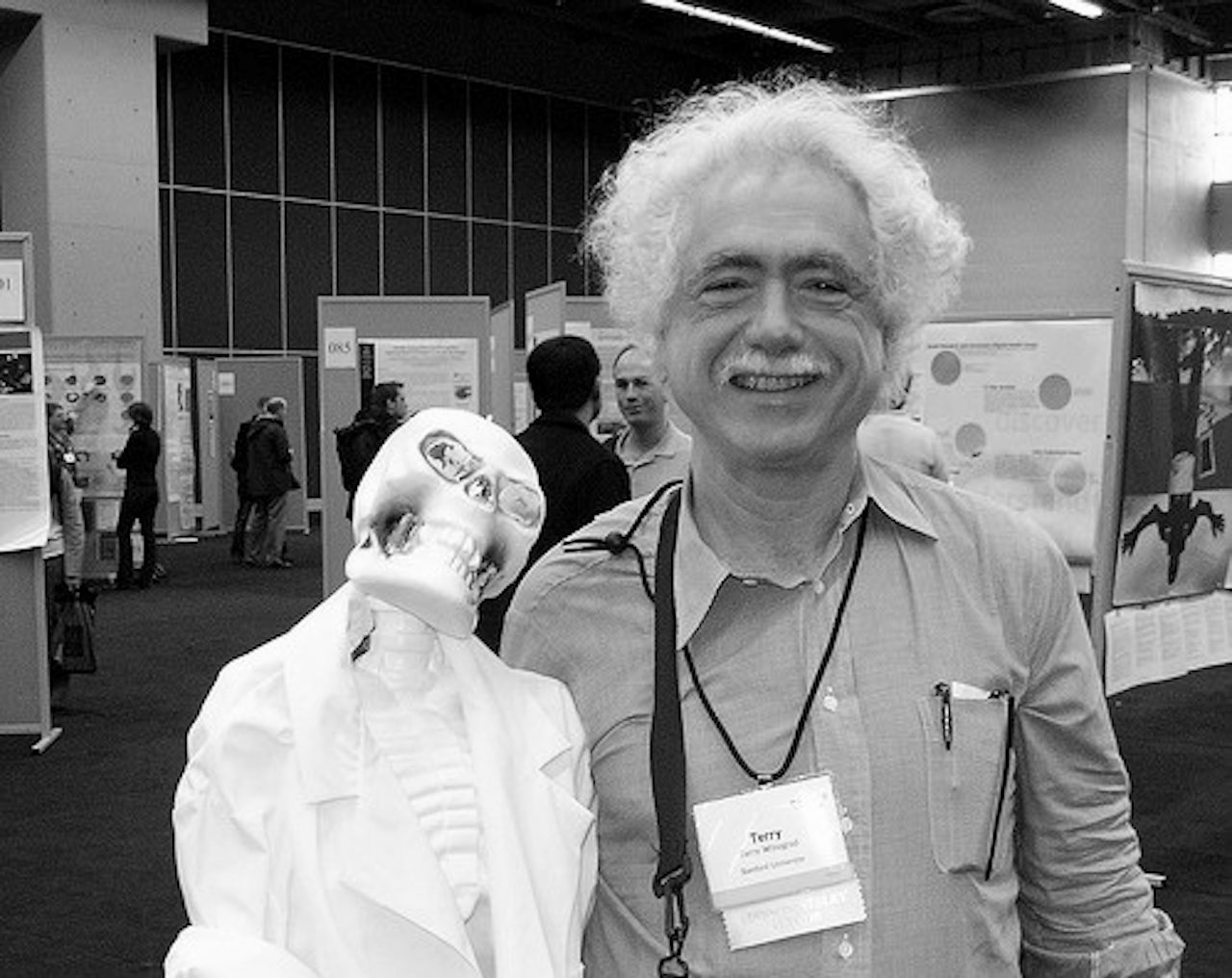

However, creating a general AI proved to be so difficult that it led to widespread disillusionment. As a doctoral student at MIT in the late 1960s, Terry Winograd created SHRDLU, a pioneering early advance in the development of natural language software. SHRDLU, whose name was an inside joke drawn from Mad magazine, carried on a simple dialogue with a user about a small world of block objects. The program made Winograd an authentic star in the emerging field of AI, but over the course of the 1970s he came to question the fundamental approach researchers had developed to designing AI systems.

In 1986 Winograd, with Chilean political philosopher Fernando Flores, published Understanding Computers and Cognition: A New Foundation for Design. Drawing on philosophy and linguistics as much as computer science, the book was a critique of the then-dominant, “representational” approach to AI — the idea that thought can be captured via a formal representation in symbolic logic, which reduces the human mind to a device operating on bits of information according to formal rules. Winograd became the first of the technology’s true pioneers to desert the faith. Eventually he became a leader in the fields of “IA” (“intelligence augmentation”), which aims to create technology that can assist humans rather than replace them, and human-centered design, a now-common approach to software design that puts users’ needs and requirements at the center of product development.

Winograd, who retired from the Stanford computer science department in 2014, spoke to The Outline about the state of AI and what his seminal contribution to the first historical backlash against AI can add to today’s debate.

AI has been around since the 1960s. Why is AI suddenly fashionable again after decades in the shadows?

After the initial wave of optimism that greeted the first big steps forward in AI in the 60s and 70s, the promise didn’t show up. Even fairly mundane tasks in the real world didn’t yield many results. The wave passed by. Neural nets [and many of the other technologies leading the latest wave in AI innovation] existed in the 60s but didn’t get far because of a lack of computational power and sophistication. The fundamental advance of the last 10 years is the amount of computational power and the amount of data it can be applied to. Engineers are now getting notable results, such as self-driving cars.

How close do you think we are to achieving “general AI”?

I’m still in the agnostic phase — I’m not sure the techniques we have are going to get to general AI, person-like AI. I believe that nothing’s going on in my head that isn’t physical — so in principle if you could reproduce that physical structure, you could build an AI that’s just like a person. Today’s techniques are not close to that in a direct sense. Everybody knows that my brain does not operate by having trillions of examples. The mechanisms that work for AI practically today aren’t mirrors of what goes on in the brain.

How do you judge this moment in the public debate about AI? Is all this fear-mongering a useful contribution? Is it fair? Is it silly?

Having those questions out for discussion is good; getting large amounts of hysteria and publicity isn’t. The question is: How do you raise these issues in a thoughtful way without saying, “Skynet is upon us”? Musk, I think, is more on the “clickbait” end of the public discussion about AI. But I do believe that AI is facilitating huge problems for our society, not because it’s going to be smart like a person but because robotics is going to change the whole employment picture, and because the use of AI in decision-making is going to move decision-making in directions that may not have the element of human consideration.

Thirty years ago you and Fernando Flores published Understanding Computers and Cognition. The essence of your critique was that engineers were building a form of intelligence, but not something that captured, in machine form, the richness of the human experience. Do you think the world of AI has absorbed the lessons of the book?

AI not necessarily, but the world of software/tech has. Our critique was a philosophical critique of the representational approach to AI — it was not a statement about whether, in general principles, there could be an AI. The book was mostly written in the late 70s, before the Macintosh came out, before the field of human-computer interaction emerged. I think there’s been a huge evolution in the way that people think about designing computers for humans which either explicitly or more often implicitly does take into account some of the Heideggerian views in the book. In the decades since the book was published, the design community has really taken on the philosophy that you need to learn by testing with real users. That path of saying, “How do people interact with tools? How do people interact with objects? How do people interact with each other? How do we facilitate that?” — that’s where computing has mostly gone until the recent AI surge. But that [general trend in design and computing] went on a separate path from AI — that isn’t considered AI, it’s not part of the AI discussion.

How open do you think the AI community is to new thinking about design and the societal dangers AI poses?

Education can help. The symbolic systems program at Stanford [in which computer science students take classes in philosophy, linguistics and other non-STEM disciplines] is a good way to get broader thinking involved in tech. Even then there’s a fundamental issue of human nature which gets in the way here — if you’re succeeding pretty well at what you’re doing, you’re not interested in changing. To the degree the tech community says, ‘Look at all these great successes, look at all the wonderful stuff we’re doing,’ and you say, ‘But wait a minute, you’re limiting yourself’ — it [that critique] doesn’t grab, except for those who have a social understanding.

How does that conversation evolve in a way that the AI community is forced to think more carefully about these issues?

You have to wait for breakdowns. Facebook [and the controversy over its role in publicizing fake news during the U.S. presidential election] is a good example. Yes, [Mark Zuckerberg] didn’t intend bad consequences, but look how the algorithms are creating bad consequences. The problem you thought you were solving doesn’t match the problem once it’s framed differently. The problem Facebook is experiencing now does open the question of how you think in broader terms. It’s unfortunate, in a way: you don’t get a response until you have a breakdown, until there’s clearly enough wrong that you ask, “What should I do differently?”