Peter Shulman, an associate history professor at Case Western Reserve University in Ohio, was lecturing on the reemergence of the Ku Klux Klan in the 1920s when a student asked an odd question: Was President Warren Harding a member of the KKK?

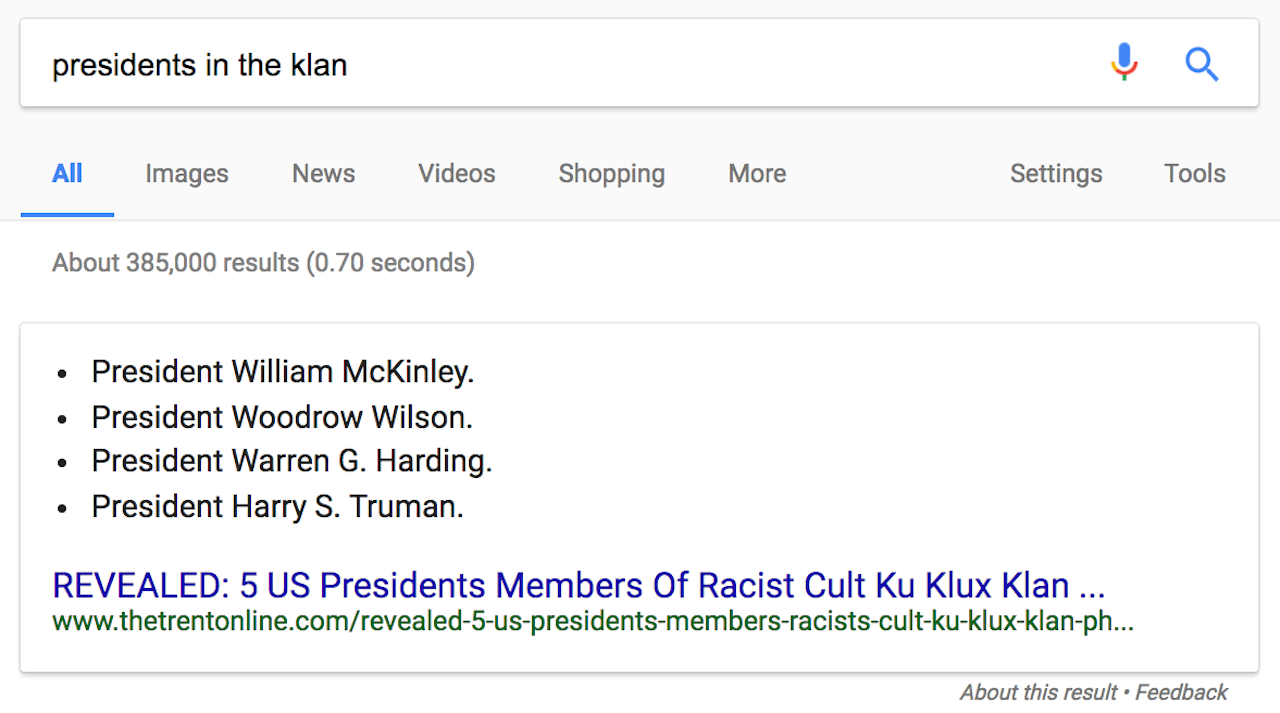

Shulman was taken aback. He said he wasn’t aware of that allegation, and since Harding had supported anti-lynching legislation, it seemed unlikely. But then a second student pulled out his phone and announced that yes, Harding had been a Klan member, and so had four other presidents. It was right there on Google, prominently displayed in a box at the top of the page.

For most of its history, Google did not answer questions. Users typed in what they were looking for and got a list of web pages that might contain the desired information. Google has long recognized that many people don’t want a research tool, however; they want a quick answer. Over the past five years, the company has been moving toward providing direct answers to questions along with its traditional list of relevant web pages.

Type in the name of a person and you’ll get a box with a photo and biographical data. Type in a word and you’ll get a box with a definition. Type in “When is Mother’s Day” and you’ll get a date. Type in “How to bake a cake?” and you’ll get a basic cake recipe. These are Google’s attempts to provide what Danny Sullivan, a journalist and founder of the blog Search Engine Land, calls “the one true answer.” These answers are visually set apart, encased in a virtual box with a slight drop shadow. According to MozCast, a tool that tracks changes in Google’s algorithm, almost 20 percent of the 10,000 queries in its sample return an attempt at one true answer.

Unfortunately, not all of these answers are actually true.

Back to Shulman and his students. The result Google was quoting came from a Trent Online article titled “REVEALED: 5 US Presidents Members Of Racist Cult Ku Klux Klan (PHOTOS).” Trent Online bills itself as a “leading Internet Newspaper in Nigeria.” On closer inspection, the article notes that it originally appeared on a site called iloveblackpeople.net. It claims that William McKinley, Woodrow Wilson, Warren Harding, Calvin Coolidge, and Harry Truman were members of the KKK. The only sources cited are the Christian nationalist pseudo-historian David Barton and KKK.org.

“I understand what Google is trying to do, and it’s work that perhaps requires algorithmic aid,” Shulman said in an email. “But in this instance, the question its algorithm scoured the internet to answer is simply a poorly conceived one. There have been no presidents in the Klan.”

According to the Harding Home, a museum in Harding’s former residence, the allegation that he was a Klansman was a lie concocted by the KKK and denied by Harding. Biographers of Harding and his wife found no evidence that he was a member. Similarly, there is no reason to believe that William McKinley, Calvin Coolidge, or Woodrow Wilson were members, Shulman said. (It should be noted that Wilson was still a notorious racist.) There is evidence that Harry Truman, who was also a horrible racist, met with Klansmen — but if he was a member, he wasn’t a very active one, Shulman said, and he later opposed the Klan and allied with its enemies.

14. @Google, please, please do better. Wikipedia has plenty of problems, but it's actually a much better source of info than this garbage.

— Peter A. Shulman (@pashulman) February 23, 2017

Google needs to invest in human experts who can judge what types of queries should produce a direct answer like this, Shulman said. “Or, at least in this case, not send an algorithm in search of an answer that isn’t simply ‘There is no evidence any American president has been a member of the Klan.’ It’d be great if instead of highlighting a bogus answer, it provided links to accessible, peer-reviewed scholarship.”

So how did this fiction manage to get the spot reserved for Google’s one true answer?

The push for quick answers

Many of Google’s direct answers are correct. Ask Google if vaccines cause autism, and it will tell you they do not. Ask it if jet fuel melts steel beams, and it will pull an answer from a Popular Mechanics article debunking the famous 9/11 conspiracy theory. But it’s easy to find examples of Google grabbing quick answers from shady places.

Would you trust an answer pulled from the anti-vaccine alternative health content farm Mercola.com?

How about an answer from the Facebook page of a white nationalist group in Australia?

Would you trust a system that falls for a Monty Python joke?

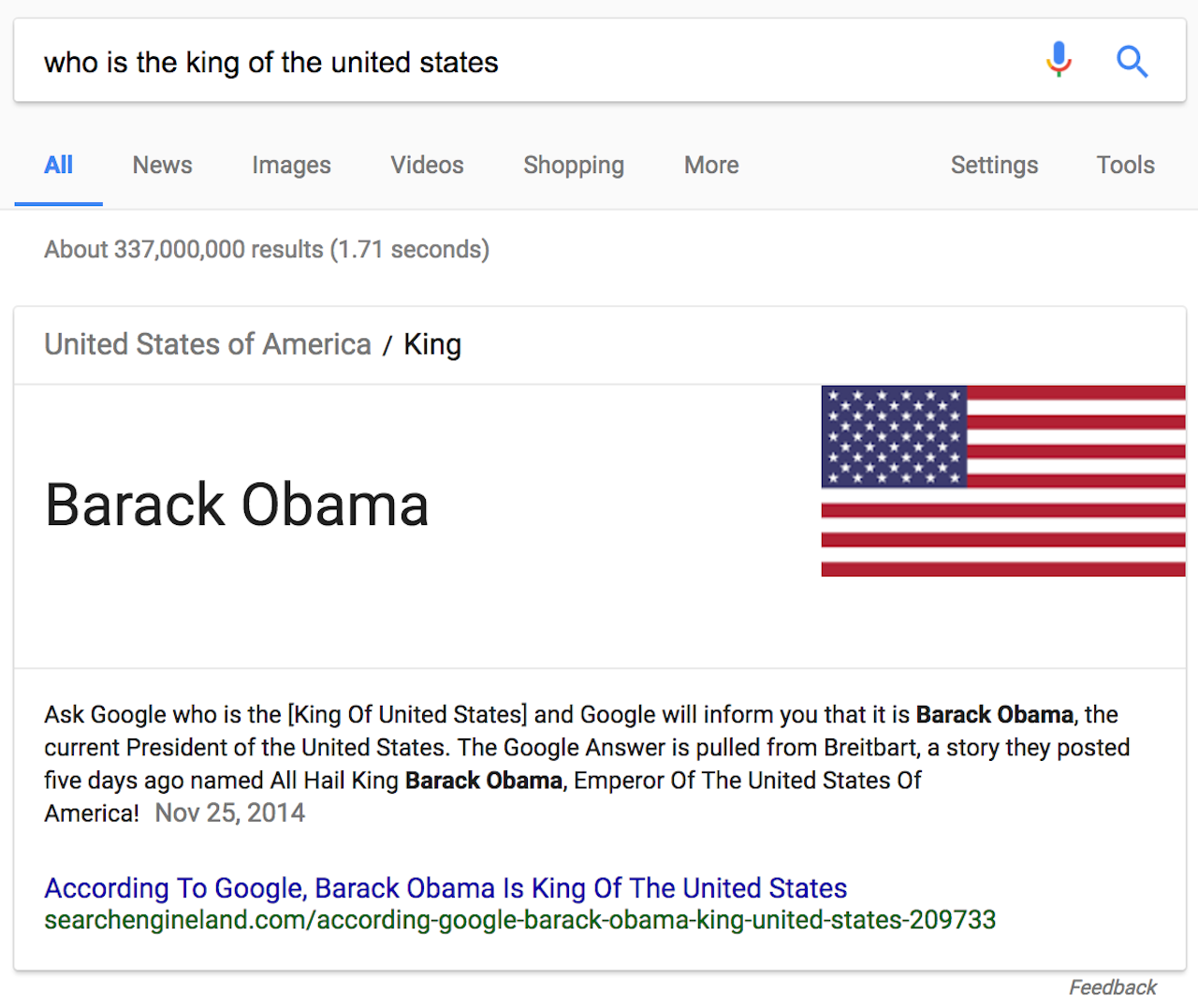

What about a system that thinks Barack Obama is the current king of America — first because of an answer sourced from a Breitbart article, and now because of an article criticizing that answer?

What about a system that thinks Obama is planning a coup d'etat?

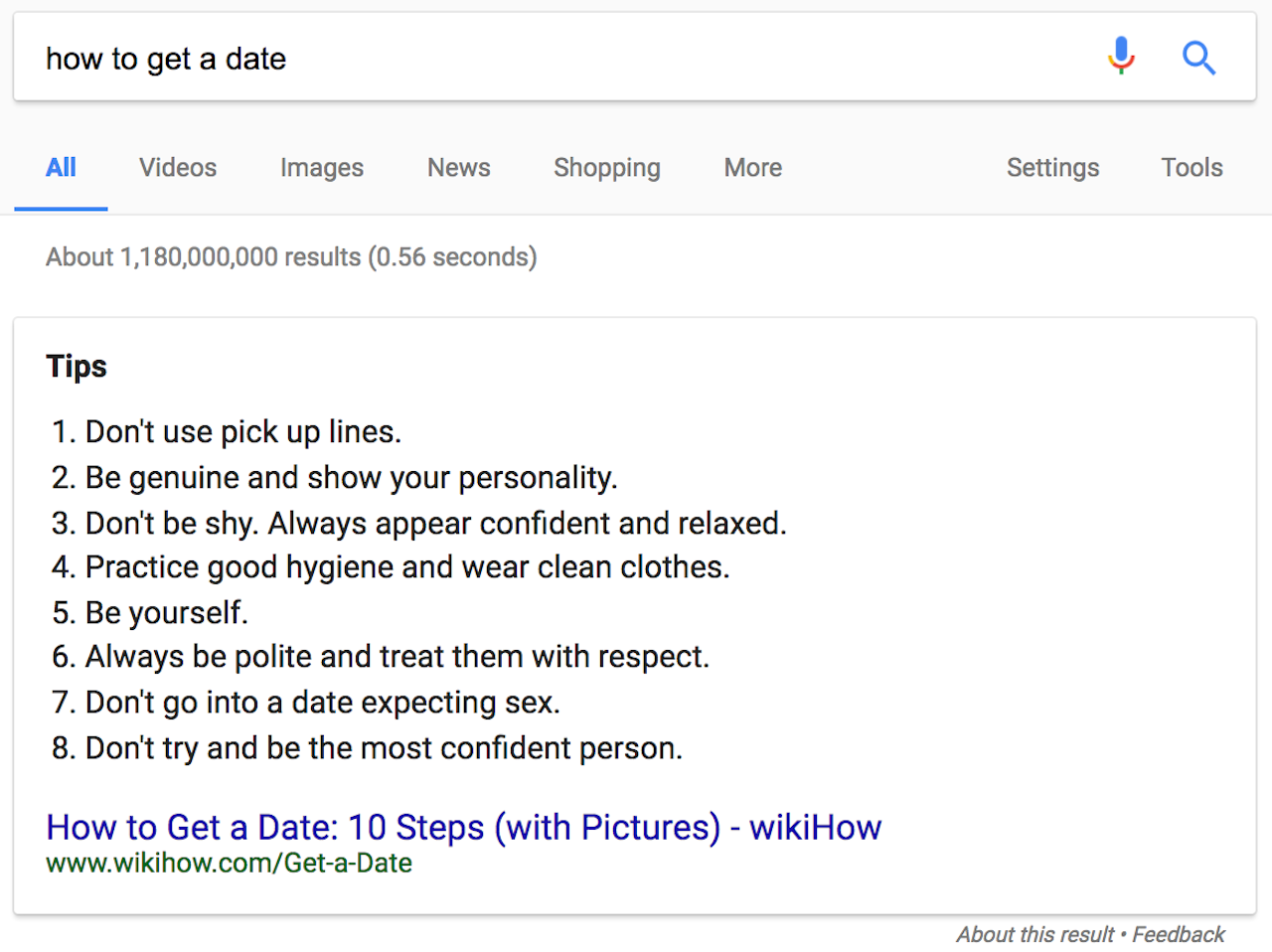

Would you trust a system designed to give one true answer to the query, “how to get a date”?

I mean, this isn’t terrible advice. But why does Google even want to deliver a single answer to such a subjective question?

Google doesn’t always get tripped up like this. Many of its answers, like the date of Mother’s Day, come from what Google calls its Knowledge Graph, a database of hard facts sourced from places like Wikipedia and the CIA World Factbook. Google started implementing these answers in 2012, and they’re very reliable.

But a faster-growing number of quick answers, like the cake recipe and the Monty Python joke and the presidents supposedly in the Klan, are not as carefully curated. These answers are algorithmically generated from web pages that rank highly in the search results. They’re officially called “featured snippets.”

Google added featured snippets sometime in 2014, and it’s not like the company doesn’t know about this problem. In 2015, users discovered that the query “What happened to the dinosaurs?” produced the quick answer “Dinosaurs are used more than anything else to indoctrinate children and adults in the idea of millions of years of earth history,” pulled from a fundamentalist Christian page titled “What Really Happened to the Dinosaurs?” Google didn’t comment, but the featured snippet changed to a quote from the University of Illinois Extension.

In December, a writer for The Guardian noticed that asking “Are women evil?” produced this enlightening featured snippet: “Every woman has some degree of prostitute in her. Every woman has a little evil in her… Women don’t love men, they love what they can do for them. It is within reason to say women feel attraction but they cannot love men.”

Google Home giving that horrible answer to "are women evil" on Friday. Good article on issues; I'll have more later https://t.co/EUtrx4ZFul pic.twitter.com/Ec8mEqx8Am

— Danny Sullivan (@dannysullivan) December 4, 2016

Google intervened manually after the Guardian piece. “Are women evil?” now pulls up the regular list of search results with no featured snippet.

Why does Google do this?

Google has an excellent reputation, and it’s well-deserved. Mammoth forces of spammers, scammers, and other bad actors are constantly trying to manipulate Google’s search rankings, and yet its results remain incredibly useful. So why does the company seem willing to take hits to that reputation by promoting conspiracy theories, bigotry, and misinformation?

For one thing, people really like this style of search. “It’s having a very good impact on the search results. People love them,” said Eric Enge, CEO of Stone Temple Consulting, a digital marketing agency that has done three deep-dive studies on featured snippets. In one of his company’s surveys, people said the feature they wanted most was the ability to “answer directly without having to visit another website or another app.” These quick answers are great when they’re accurate, especially if you’re on a mobile device, and they often are accurate — or at least accurate enough to satisfy the searcher.

Focusing on direct answers is also a longer-term play. The number of browser-less internet-connected devices is growing fast, and already voice-activated assistants like Amazon Echo and Google Home are penetrating the market. Google’s traditional list of search results does not translate well to voice — imagine Google Home reading you a list of 10 websites when you just want to know how many calories are in an orange.

“There’s data out there that suggests by the year 2020, which is only three years away, that 75 percent of internet-connected devices will be something other than a PC, smartphone, or tablet,” Enge said. “That 75 percent of connected devices will most likely interact by voice, and if you’re interacting by voice, and it responds in voice, you know how many answers you get? One. That’s only going to work if you have that answer.”

And here's what happens if you ask Google Home "is Obama planning a coup?" pic.twitter.com/MzmZqGOOal

— Rory Cellan-Jones (@ruskin147) March 5, 2017

The fastest way for Google to improve its featured snippets is to release them into the real world and have users interact with them. Every featured snippet comes with two links at the bottom: “About this result” and “Feedback.” The former explains what featured snippets are, with guidelines for webmasters on how to opt out of them or optimize for them. The latter simply asks, “What do you think?” with the option to respond with “This is helpful,” “Something is missing,” “Something is wrong,” or “This isn't useful,” and a section for comments.


Google uses this feedback form and other algorithmic signals from users to continuously improve and refine featured snippets. Snippets change a lot, Enge said — webmasters will optimize for a query, score a featured snippet, and then lose it days later. The system appears to work at least part of the time: On February 24, the query “MSG FDA” — my attempt to find the agency’s guidelines on MSG as part of fact-checking for this story — turned up a featured snippet from the MSG conspiracy theory website truthinlabeling.org. Within five days, that featured snippet had disappeared.

In theory, featured snippets will always surface some bad answers temporarily, but the net effect will be better answers. “It’ll never be fully baked, because Google can’t tell if something is truly a fact or not,” said Danny Sullivan of Search Engine Land. “It depends on what people post on the web. And it can use all its machine learning and algorithms to make the best guess, but sometimes a guess is wrong. And when a guess is wrong, it can be spectacularly terrible.”

Again, featured snippets are often incredibly useful, especially when you need to be hands-free.

The ability to read featured snippets is also the major distinguishing characteristic between Google Home and competitors like Amazon Echo and Siri, Sullivan said. “Google sees that as a competitive advantage and they don’t want to turn it off,” he said. The problem is that even when they are wrong, the featured snippets bear Google’s highest endorsement. “Where is the tipping point where you get enough of these embarrassing answers that you decide to shut it off?”

Google has recently taken heat for its suggested queries, the autocompleted phrases that pop up when you start typing a question. However, those seem much less damaging than the promotion of bad information that is presented as Google’s one true answer. As Google attempts to combat the proliferation of fake news by banning publishers from its ad platform, it continues to disseminate fake news itself through featured snippets. Fake news typically comes from hastily built content farms. In this case, it’s coming from Google, which surveys show is trusted by around 63 percent of people, more than both social media and traditional media.


“The whole experience was quite shocking,” Shulman said. “The good news is this episode turned into a teaching opportunity for my class.” For now, better information literacy among users may be the best solution, since Google does not appear to treat the problem as a priority.

Google declined to answer specific questions about featured snippets, instead sending a boilerplate statement: “The Featured Snippets feature is an automatic and algorithmic match to the search query, and the content comes from third-party sites. We’re always working to improve our algorithms, and we welcome feedback on incorrect information, which users may share through the ‘Feedback’ button at the bottom right of the Featured Snippet.”